I. Lab topology

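How it works:

Front-end users access the VIP. One of the two Apache servers runs the web service, serving pages from an NFS mount; the other exchanges heartbeat messages with it through corosync, and as soon as the monitored Apache server goes down, it takes over the service immediately.

This lab is fairly rough: only Apache is made highly available, and the back-end NFS server remains a single point of failure. A later post will cover that.

Ansible is used only for convenience, so that all nodes can be managed from one machine.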

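Implementation:

Three resources are defined in this article: the VIP, the web service, and NFS.

The three resources are bound together when they are defined, so they cannot run separately on different nodes (otherwise no web service could be provided), and their start order is set to VIP, NFS, WEB.

Give the two Apache servers different web pages to make verification easy (when one goes down, the other can immediately be reached as the backup).

The client accesses the site from Windows.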

II. Configuration

Prepare four servers: one for ansible, two for Apache, and one for NFS:

ansible:172.16.249.207

apache:172.16.39.2 172.16.39.3

NFS:172.16.39.4

VIP: 172.16.39.100

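1. First, set up ansible so that it can manage the two Apache servers and the NFS server remotely; ansible manages hosts over SSH.

Set up key-based SSH trust from the ansible host to the Apache and NFS servers, so that later ansible commands do not ask for a password (typing passwords all the time gets old).

First, generate a key pair on the ansible host: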

[iyunv@station139 ~]# ssh-keygen -t rsa -P ''    # empty passphrase: uppercase -P, then a space, then ''

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? yes

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.


Next, copy the public key to each of the other three servers:

[iyunv@station139 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.39.2

The authenticity of host '172.16.39.2 (172.16.39.2)' can't be established.

RSA key fingerprint is c7:65:26:ff:5e:11:b9:de:a4:7a:20:9d:a2:28:e4:66.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.16.39.2' (RSA) to the list of known hosts.

root@172.16.251.254's password:

Now try logging into the machine, with "ssh 'root@172.16.251.254'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[iyunv@station139 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.39.3

The authenticity of host '172.16.39.3 (172.16.39.3)' can't be established.

RSA key fingerprint is 82:fa:a7:34:ba:20:d3:14:ab:70:9d:29:fc:d0:af:92.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.16.39.3' (RSA) to the list of known hosts.

root@172.16.251.130's password:

Now try logging into the machine, with "ssh 'root@172.16.251.130'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[iyunv@station139 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@172.16.39.4

The authenticity of host '172.16.251.153 (172.16.251.153)' can't be established.

RSA key fingerprint is 7f:08:c0:6d:f6:fe:0a:ec:51:cf:12:d4:bf:e4:3b:c5.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '172.16.251.153' (RSA) to the list of known hosts.

root@172.16.251.153's password:

Now try logging into the machine, with "ssh 'root@172.16.251.153'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

Try copying any file from the ansible host to 172.16.39.4 and see whether a password is requested. As shown below, it is not:

[iyunv@station139 ~]# scp /etc/fstab root@172.16.39.4:/tmp/

fstab 100% 1010 1.0KB/s 00:00
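The per-host ssh-copy-id runs above can be scripted. A minimal sketch, assuming the three node addresses from this lab and that root logins are allowed; push_keys and check_keys are hypothetical helper names, not part of any tool. check_keys uses ssh's BatchMode option, so a missing key makes ssh fail instead of prompting for a password.

```shell
# Managed nodes in this lab: two Apache nodes and the NFS server.
NODES="172.16.39.2 172.16.39.3 172.16.39.4"
PUBKEY="$HOME/.ssh/id_rsa.pub"

# Hypothetical helper: copy the ansible host's public key to every node.
# ssh-copy-id asks for each node's root password once.
push_keys() {
    for h in $NODES; do
        ssh-copy-id -i "$PUBKEY" "root@$h" || return 1
    done
}

# Hypothetical helper: confirm that password-less login now works.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_keys() {
    rc=0
    for h in $NODES; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@$h" true; then
            echo "OK   $h"
        else
            echo "FAIL $h"
            rc=1
        fi
    done
    return $rc
}
```

Run push_keys once, then check_keys; every line should start with OK before moving on to ansible.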

2. Download and install ansible, then add these hosts to groups in the ansible configuration:

[iyunv@station139 ~]# ls

anaconda-ks.cfg ansible-1.5.4-1.el6.noarch.rpm install.log install.log.syslog

[iyunv@station139 ~]# yum -y install ansible-1.5.4-1.el6.noarch.rpm

Installed:

ansible.noarch 0:1.5.4-1.el6

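Dependency Installed:    (ansible pulls in many packages; because the local rpm is installed with yum, the dependencies are resolved and installed automatically)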

PyYAML.x86_64 0:3.10-3.el6 libyaml.x86_64 0:0.1.3-1.el6

python-babel.noarch 0:0.9.4-5.1.el6 python-crypto.x86_64 0:2.0.1-22.el6

python-jinja2.x86_64 0:2.2.1-1.el6 python-paramiko.noarch 0:1.7.5-2.1.el6

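Before configuring the HA cluster, make sure all nodes can resolve each other's names, and that both forward and reverse resolution agree with the output of "uname -n".

The /etc/hosts file on the three service hosts should be identical, as follows: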

[iyunv@node1 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.0.1 server.magelinux.com server

172.16.39.2 node1.corosync.com

172.16.39.3 node2.corosync.com

172.16.39.4 node3.corosync.com
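The requirement above (names must agree with "uname -n") can be checked on each node. A minimal sketch, assuming the names come from /etc/hosts exactly as listed; name_for_ip and check_self are hypothetical helper names.

```shell
# Print the first hostname that a hosts(5)-format file maps to an IP.
# $1 = file, $2 = IP address.
name_for_ip() {
    awk -v ip="$2" '$1 == ip { print $2; exit }' "$1"
}

# Hypothetical helper: compare this node's own /etc/hosts entry with uname -n.
# Example on node1: check_self 172.16.39.2
check_self() {
    want=$(name_for_ip /etc/hosts "$1")
    have=$(uname -n)
    if [ "$want" = "$have" ]; then
        echo "OK: $1 -> $have"
    else
        echo "MISMATCH: /etc/hosts says '$want', uname -n says '$have'" >&2
        return 1
    fi
}
```

To check through the system resolver instead of the file, getent hosts 172.16.39.3 (reverse) and getent hosts node2.corosync.com (forward) should return the same pair.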

On the ansible host, comment out every line of /etc/ansible/hosts and add the following groups by hand:

[webservice]

node1.corosync.com

node2.corosync.com

[webstore]

node3.corosync.com
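The same inventory can be written non-interactively and then checked with ansible's ping module, which tests SSH login and Python on each node rather than ICMP. A sketch; INVENTORY defaults to a local file here (an assumption, so the result can be inspected first), and moving it to /etc/ansible/hosts is left to you.

```shell
# Where to write the inventory; point it at /etc/ansible/hosts when ready.
INVENTORY="${INVENTORY:-./ansible_hosts}"

# Two groups: the Apache pair and the NFS server.
cat > "$INVENTORY" <<'EOF'
[webservice]
node1.corosync.com
node2.corosync.com

[webstore]
node3.corosync.com
EOF

# Afterwards, from the ansible host:
#   ansible -i "$INVENTORY" all -m ping
```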

Synchronize the clocks of the three servers from ansible:

[iyunv@node ~]# ansible webstore -m shell -a "ntpdate 172.16.0.1"

node3.corosync.com | success | rc=0 >>

23 Apr 13:08:55 ntpdate[1923]: adjust time server 172.16.0.1 offset 0.000863 sec

[iyunv@node ~]# ansible webservice -m shell -a "ntpdate 172.16.0.1"

node1.corosync.com | success | rc=0 >>

23 Apr 13:08:59 ntpdate[2036]: adjust time server 172.16.0.1 offset -0.000901 sec

node2.corosync.com | success | rc=0 >>

23 Apr 13:08:59 ntpdate[2077]: adjust time server 172.16.0.1 offset -0.000932 sec

Convenient, isn't it? Run it once on one host and every node is done.

Install corosync and pacemaker on the two Apache hosts:

[iyunv@node ~]# ansible webservice -m yum -a "name=corosync state=present"

[iyunv@node ~]# ansible webservice -m yum -a "name=pacemaker state=present"

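Install corosync on the ansible host as well, edit its configuration file there, and run corosync-keygen to generate the authentication key the Apache nodes use to trust each other; both files are then pushed to the nodes with ansible.

Writing corosync.conf and using corosync-keygen were covered in an earlier post, so only the main steps are shown here: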
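The configuration file itself is not shown in this post. Below is a minimal corosync 1.x sketch for this lab, not the author's actual file. Assumptions: the nodes sit on 172.16.0.0/16 (bindnetaddr), the multicast address 239.255.39.1 is an arbitrary pick, and pacemaker is loaded as a corosync plugin (service ver: 0), so starting corosync also starts pacemaker. secauth: on is what makes the authkey from corosync-keygen required.

```shell
# Write the sketch locally first; copy it to /etc/corosync/corosync.conf when satisfied.
COROSYNC_CONF="${COROSYNC_CONF:-./corosync.conf}"

cat > "$COROSYNC_CONF" <<'EOF'
compatibility: whitetank

totem {
    version: 2
    # Authenticate and encrypt cluster traffic with /etc/corosync/authkey.
    secauth: on
    threads: 0
    interface {
        ringnumber: 0
        # Assumed network address of the cluster interface (172.16.0.0/16).
        bindnetaddr: 172.16.0.0
        # Arbitrary multicast group; must be the same on both nodes.
        mcastaddr: 239.255.39.1
        mcastport: 5405
        ttl: 1
    }
}

logging {
    fileline: off
    to_stderr: no
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    to_syslog: no
    debug: off
    timestamp: on
}

# Start pacemaker as a corosync plugin.
service {
    ver: 0
    name: pacemaker
}

aisexec {
    user: root
    group: root
}
EOF
```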

[iyunv@node ~]# vim /etc/corosync/corosync.conf

[iyunv@node ~]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Writing corosync key to /etc/corosync/authkey.

[iyunv@node ~]# ansible webservice -m copy -a "src=/etc/corosync/authkey dest=/etc/corosync"

node1.corosync.com | success >> {"changed": true, "dest": "/etc/corosync/authkey", "gid": 0, "group": "root", "md5sum": "b8db2726121363c4695615d8cc8ce1ff", "mode": "0644", "owner": "root", "size": 128, "src": "/root/.ansible/tmp/ansible-tmp-1398234483.99-122742266566782/source", "state": "file", "uid": 0

}

node2.corosync.com | success >> {"changed": true, "dest": "/etc/corosync/authkey", "gid": 0, "group": "root", "md5sum": "b8db2726121363c4695615d8cc8ce1ff", "mode": "0644", "owner": "root", "size": 128, "src": "/root/.ansible/tmp/ansible-tmp-1398234484.2-198090798386440/source", "state": "file", "uid": 0

}

[iyunv@node ~]# ansible webservice -m copy -a "src=/etc/corosync/corosync.conf dest=/etc/corosync"

node1.corosync.com | success >> {"changed": true, "dest": "/etc/corosync/corosync.conf", "gid": 0, "group": "root", "md5sum": "906edcbefac607609795d78c0da57be3", "mode": "0644", "owner": "root", "size": 519, "src": "/root/.ansible/tmp/ansible-tmp-1398234502.27-114754154513206/source", "state": "file", "uid": 0

}

node2.corosync.com | success >> {"changed": true, "dest": "/etc/corosync/corosync.conf", "gid": 0, "group": "root", "md5sum": "906edcbefac607609795d78c0da57be3", "mode": "0644", "owner": "root", "size": 519, "src": "/root/.ansible/tmp/ansible-tmp-1398234502.61-246106036244835/source", "state": "file", "uid": 0

}

With the key generated and the configuration file copied, start corosync:

[iyunv@node ~]# ansible webservice -m service -a "name=corosync state=started"

node2.corosync.com | success >> {"changed": true, "name": "corosync", "state": "started"

}

node1.corosync.com | success >> {"changed": true, "name": "corosync", "state": "started"

}

You can verify it on one of the Apache nodes:

[iyunv@node2 yum.repos.d]# service corosync status

corosync (pid 23670) is running...

corosync is now configured.
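Beyond the service status, ring and membership state can be checked from the ansible host. A sketch; check_cluster is a hypothetical helper. corosync-cfgtool -s prints the ring status on each node (a healthy ring reports "no faults"), and crm_mon -1 prints the pacemaker cluster status once, where both nodes should appear as Online.

```shell
# Hypothetical helper, run on the ansible host.
check_cluster() {
    # Ring status on both Apache nodes.
    ansible webservice -m shell -a "corosync-cfgtool -s" || return 1
    # One-shot pacemaker status, asked from a single node.
    ansible node1.corosync.com -m shell -a "crm_mon -1"
}
```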

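Next, install crm (crmsh, the command-line interface for configuring CRM resources) on both Apache nodes.

The crmsh package, together with pssh, is on the ansible host; copy both to the two nodes and install them there.

Only the commands are shown below, not their output: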

[iyunv@node ~]# ansible webservice -m copy -a "src=/root/crmsh-1.2.6-4.el6.x86_64.rpm dest=/tmp"

[iyunv@node ~]# ansible webservice -m copy -a "src=/root/pssh-2.3.1-2.el6.x86_64.rpm dest=/tmp"

Then install pssh and crmsh on the Apache nodes:

[iyunv@node ~]# ansible webservice -a "rpm -i /tmp/pssh-2.3.1-2.el6.x86_64.rpm"

node1.corosync.com | success | rc=0 >>

node2.corosync.com | success | rc=0 >>

[iyunv@node ~]# ansible webservice -a "rpm -i /tmp/crmsh-1.2.6-4.el6.x86_64.rpm"

node1.corosync.com | FAILED | rc=1 >>

error: Failed dependencies:    (rpm -i does not resolve dependencies, so crmsh is installed with the yum module below instead)

python-dateutil is needed by crmsh-1.2.6-4.el6.x86_64

python-lxml is needed by crmsh-1.2.6-4.el6.x86_64

redhat-rpm-config is needed by crmsh-1.2.6-4.el6.x86_64

node2.corosync.com | FAILED | rc=1 >>

error: Failed dependencies:

python-dateutil is needed by crmsh-1.2.6-4.el6.x86_64

python-lxml is needed by crmsh-1.2.6-4.el6.x86_64

[iyunv@node ~]# ansible webservice -m yum -a "name=/tmp/crmsh-1.2.6-4.el6.x86_64.rpm state=present"

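Ansible's job is done at this point. The rest is configured on the DC (Designated Coordinator), which in practice means either Apache host.

But first, set up the NFS server, which is needed later: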

[iyunv@node3 yum.repos.d]# mkdir /web

[iyunv@node3 yum.repos.d]# vim /etc/exports

[iyunv@node3 yum.repos.d]# service nfs start

Starting NFS services: exportfs: Failed to stat /www: No such file or directory

[ OK ]

Starting NFS quotas: [ OK ]

Starting NFS mountd: [ OK ]

Starting NFS daemon: [ OK ]

Starting RPC idmapd: [ OK ]

[iyunv@node3 yum.repos.d]# vim /etc/exports

/web 172.16.39.0/24(rw)
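Note that the startup error and the showmount output in this transcript refer to /www, while the directory created above, and the one the Filesystem resource mounts later, is /web; the export line has to name /web. A sketch that writes the line to a staging file (the file name is an assumption) before it goes into /etc/exports on node3:

```shell
# Directory shared by node3; the Filesystem resource mounts 172.16.39.4:/web,
# so the export must name exactly this path.
SHARE=/web
CLIENTS=172.16.39.0/24
EXPORTS_FILE="${EXPORTS_FILE:-./exports}"

printf '%s %s(rw)\n' "$SHARE" "$CLIENTS" > "$EXPORTS_FILE"

# On node3, after putting the line into /etc/exports:
#   exportfs -rav                  # re-export everything and list it
#   showmount -e 172.16.39.4       # should now list /web
```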

On an Apache node, check whether the NFS export is visible:

[iyunv@node2 yum.repos.d]# showmount -e 172.16.39.4

Export list for 172.16.39.4:

/www 172.16.39.0/24
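Because pacemaker will start and stop httpd and mount the share itself, httpd should be installed on both Apache nodes but neither running nor enabled at boot, and /var/www/html should not be mounted there permanently. A sketch of these preparations from the ansible host; prepare_web_nodes is a hypothetical helper, and the manual mount is only a one-off check that the export is usable.

```shell
# Hypothetical helper, run on the ansible host.
prepare_web_nodes() {
    # Make sure Apache is installed on both nodes.
    ansible webservice -m yum -a "name=httpd state=present" || return 1
    # Pacemaker manages httpd (lsb:httpd), so it must not start on its own.
    ansible webservice -m service -a "name=httpd state=stopped enabled=no" || return 1
    # One-off check: mount the export, list it, unmount it again.
    ansible webservice -m shell -a \
        "mount -t nfs 172.16.39.4:/web /var/www/html && { ls /var/www/html; umount /var/www/html; }"
}
```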

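3. Next, run crm on an Apache node to define the resources.

First, disable STONITH, since there is no fencing device in this lab.

Then set the policy for when the cluster does not have quorum: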

[iyunv@node1 ~]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# verify

crm(live)configure# commit
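Why quorum is ignored here: a cluster partition has quorum only when it holds more than half of the expected votes. Assuming one vote per node and two nodes, the surviving node after a failure gets:

$$
\text{quorum} \iff v_{\text{present}} > \frac{v_{\text{expected}}}{2},
\qquad v_{\text{expected}} = 2,\; v_{\text{present}} = 1
\;\Rightarrow\; 1 > \tfrac{2}{2} = 1 \ \text{is false.}
$$

So with the default no-quorum-policy (stop), the survivor would stop its resources instead of taking over; no-quorum-policy=ignore lets it keep running them.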

Define all the resources first (VIP, apache, and NFS), then put them into one group and set their start order:

[iyunv@node1 ~]# crm

crm(live)# configure

crm(live)configure# primitive webip ocf:heartbeat:IP<Tab><Tab>

IPaddr IPaddr2 IPsrcaddr

crm(live)configure# primitive webip ocf:heartbeat:IPaddr params ip=172.16.39.100 op monitor interval=30s timeout=20s on-fail=restart

crm(live)configure# verify

crm(live)configure# primitive webstore ocf:heartbeat:Filesystem params device="172.16.39.4:/web" directory="/var/www/html" fstype="nfs" op monitor interval=20s timeout=40s op start timeout=60s op stop timeout=60s on-fail=restart

crm(live)configure# verify

crm(live)configure# primitive webserver lsb:httpd op monitor interval=30s timeout=20s on-fail=restart

crm(live)configure# verify

crm(live)configure# group webserver webip webstore webserver

ERROR: webserver: id is already in use

crm(live)configure# group webservice webip webstore webserver

crm(live)configure# order webip_before_webstore_before_webserver mandatory: webip webstore webserver

crm(live)configure# verify

crm(live)configure# commit

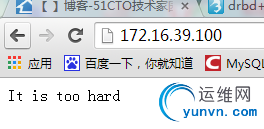
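The same configuration can be kept in a file and loaded in one step with crm configure load update, which helps when rebuilding the cluster. A sketch under these assumptions: the file name webservice.crm is hypothetical, the definitions repeat the ones entered above, and on-fail=restart is attached to the Filesystem monitor op rather than its stop op. In Pacemaker a group already keeps its members on the same node and starts them in the listed order, so the separate order constraint above is redundant but harmless.

```shell
CRM_FILE="${CRM_FILE:-./webservice.crm}"

cat > "$CRM_FILE" <<'EOF'
property stonith-enabled=false no-quorum-policy=ignore
primitive webip ocf:heartbeat:IPaddr \
    params ip=172.16.39.100 \
    op monitor interval=30s timeout=20s on-fail=restart
primitive webstore ocf:heartbeat:Filesystem \
    params device="172.16.39.4:/web" directory="/var/www/html" fstype="nfs" \
    op monitor interval=20s timeout=40s on-fail=restart \
    op start timeout=60s \
    op stop timeout=60s
primitive webserver lsb:httpd \
    op monitor interval=30s timeout=20s on-fail=restart
group webservice webip webstore webserver
EOF

# On node1 or node2:
#   crm configure load update webservice.crm
#   crm configure verify
#   crm status
```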

Verification:

Check the web page on the NFS server:

[iyunv@node3 web]# cat index.html

It is too hard

Verify the web service:

Stop the web service on the Apache node:
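The switch-over can be watched from any Linux host on the 172.16 network. A sketch with hypothetical helpers: poll_vip fetches the page through the VIP once per second with curl, and standby_cycle uses crmsh's node standby/online to move the whole group off a node and back. The default node name assumes node1 currently runs the group. With on-fail=restart, stopping httpd by hand typically makes pacemaker restart it on the same node once the monitor notices, so standby is the more direct way to force a failover.

```shell
VIP=172.16.39.100

# Hypothetical helper: fetch the page through the VIP N times, 1 s apart.
poll_vip() {
    n=${1:-30}
    i=0
    while [ "$i" -lt "$n" ]; do
        printf '%s ' "$(date +%T)"
        curl -s --max-time 2 "http://$VIP/" || echo "(no response)"
        i=$((i + 1))
        sleep 1
    done
}

# Hypothetical helper, run on either cluster node:
# put NODE in standby, show where resources went, then bring NODE back.
standby_cycle() {
    node=${1:-node1.corosync.com}
    crm node standby "$node"
    sleep 10
    crm_mon -1
    crm node online "$node"
}
```

Start poll_vip in one terminal, run standby_cycle on a cluster node, and the page should keep answering apart from a short gap while the group moves.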